We recently configure our own Kubernetes (K8s), cluster using MicroK8s and learn how we could use an operator for deploying a PostgreSQL Server within our cluster. Now we will learn how we could create k8s jobs that populate a database within our cluster.

Creating our Database

This exercise assume that we have the PostgreSQL operator installed in our cluster, if not we may need to follow the steps that we did in the previous post.

First we will delete the database that we created previously.

$ microk8s.kubectl delete postgresql movies-db-cluster

postgresql.acid.zalan.do "movies-db-cluster" deleted

$ microk8s.kubectl get postgresql

No resources found in default namespace.We will put everything that we are going to use in a directory that latter we could push to a repository, so we will start with a similar yaml that we original did movies-db.yml :

apiVersion: "acid.zalan.do/v1"

kind: postgresql

metadata:

name: movies-db-cluster

namespace: default

spec:

teamId: "movies"

volume:

size: 2Gi

numberOfInstances: 2

users:

moviesdba: # database owner

- superuser

- createdb

moviesuser: [] # roles

databases:

movies: moviesdba # dbname: owner

postgresql:

version: "11"We are requesting 2 Gibibytes for the volume on our database named movies and a admin user named moviesdba and normal user name moviesuser, and we like to have to instance, one will be our master and the other our replica.

For those not familiar with the Gibibytes (Gi) instead of Gigabytes (G) you may check the IEEE 1541-2002 by the International Electrotechnical Commission (IEC) or this article in the Wikipedia

Now we could create the database executing :

$ microk8s.kubectl create -f movies-db.yml

postgresql.acid.zalan.do/movies-db-cluster createdNow we we need to wait that our database is running, we could check until we get this output :

$ microk8s.kubectl get postgresql

NAME TEAM VERSION PODS VOLUME CPU-REQUEST MEMORY-REQUEST AGE STATUS

movies-db-cluster movies 11 2 2Gi 106s RunningAnd the inspect the nodes four our database with :

$ microk8s.kubectl get pods -l version=movies-db-cluster -L spilo-role

NAME READY STATUS RESTARTS AGE SPILO-ROLE

movies-db-cluster-0 1/1 Running 0 3m49s master

movies-db-cluster-1 1/1 Running 0 3m6s replicaCreating a simple Job

Now that we have our database we could start creating our Job but first we need to learn how Jobs work in k8s. A k8s job is a task that run until completion, that means if the job doesn’t end it will run forever.

We could tell k8s to deploy an image, base on a existing image, and run it until it’s end, for this we will use a yaml file to describe it, in this example will be : database-load.yml

apiVersion: batch/v1

kind: Job

metadata:

name: movies-load-run # the name of our job

labels:

job-group: movie-load # logical grouping

spec:

template:

metadata:

labels:

job-group: movie-load # logical grouping

spec:

containers:

- name: database-load-container

image: perl

command: ["perl" ,"-wle", "print 'hello from a Job'"]

restartPolicy: NeverFor running this job we could just do :

$ microk8s.kubectl create -f database-load.yml

job.batch/movies-load-run createdTo check the job that we have create we could do :

$ microk8s.kubectl get job/movies-load-run

NAME COMPLETIONS DURATION AGE

movies-load-run 0/1 3s 3sWe see that our job run during 3s, completions show 0/1 because is not running anymore. Let’s check the pods for our job

$ microk8s.kubectl get pod -l=job-name=movies-load-run

NAME READY STATUS RESTARTS AGE

movies-load-run-rgrz8 0/1 Completed 0 6m4sWe could see that we have a pod for our job that is not running and is complete, we could check the log for our run with :

$ microk8s.kubectl logs movies-load-run-rgrz8

hello from a JobRerun our Job

Our job is complete but we may want to run it again, we could try doing :

$ microk8s.kubectl create -f database-load.yml

Error from server (AlreadyExists): error when creating "database-load.yml": jobs.batch "movies-load-run" already existsAnd this is because that job already exist, so we could not create again, however we could delete it first :

$ microk8s.kubectl delete -f database-load.yml

job.batch "movies-load-run" deleted

$ microk8s.kubectl create -f database-load.yml

job.batch/movies-load-run createdAnd we could get the log as before :

$ microk8s.kubectl get pod -l=job-name=movies-load-run

NAME READY STATUS RESTARTS AGE

movies-load-run-t8lqw 0/1 Completed 0 3m13s

$ microk8s.kubectl logs movies-load-run-t8lqw

hello from a JobBut this is not great, there is a different way to do this, first we will delete our job:

$ microk8s.kubectl delete -f database-load.yml

job.batch "movies-load-run" deletedWe will modify our database-load.yml to use generateName instead of name :

apiVersion: batch/v1

kind: Job

metadata:

generateName: movies-load-run- # the name of our job

labels:

job-group: movie-load # logical grouping

spec:

template:

metadata:

labels:

job-group: movie-load # logical grouping

spec:

containers:

- name: database-load-container

image: perl

command: ["perl" ,"-wle", "print 'hello from a Job'"]

restartPolicy: NeverAnd now let’s do a couple of runs :

$ microk8s.kubectl create -f database-load.yml

job.batch/movies-load-run-ps4nn created

$ microk8s.kubectl create -f database-load.yml

job.batch/movies-load-run-8hm9h createdNow we could get our jobs with :

$ microk8s.kubectl get jobs --selector=job-group=movie-load

NAME COMPLETIONS DURATION AGE

movies-load-run-8hm9h 1/1 3s 90s

movies-load-run-ps4nn 1/1 3s 92sAnd the pods that they run with :

$ microk8s.kubectl get pods --selector=job-group=movie-load

NAME READY STATUS RESTARTS AGE

movies-load-run-8hm9h-m7rd5 0/1 Completed 0 3m22s

movies-load-run-ps4nn-dswxg 0/1 Completed 0 3m24sWe are doing this using the custom label that we have add named job-group, and we could use it even for get the logs :

$ microk8s.kubectl logs --timestamps --selector=job-group=movie-load

2019-12-18T07:52:07.434994661Z hello from a Job

2019-12-18T07:52:05.793251225Z hello from a JobNe could delete our jobs using as well the group :

$ microk8s.kubectl delete jobs --selector=job-group=movie-load

job.batch "movies-load-run-8hm9h" deleted

job.batch "movies-load-run-ps4nn" deleteOne interesting fact is that when we run a job a pod is created and remain on hour cluster until is restarted, we may not want to do that for freeing cluster resources so we want may want to delete the jobs.

There is a alpha feature of k8s, TTL Controller for Finished Resources, that allow that, but since is alpha is may change in the future so we are not going to use it, for now.

Visualizing our Jobs in Kibana

Since we want to delete our job if we do it so we could not use kubectl to view our past logs, however we could use Kibana, as it was setup in our MicroK8s, for visualizing our past jobs.

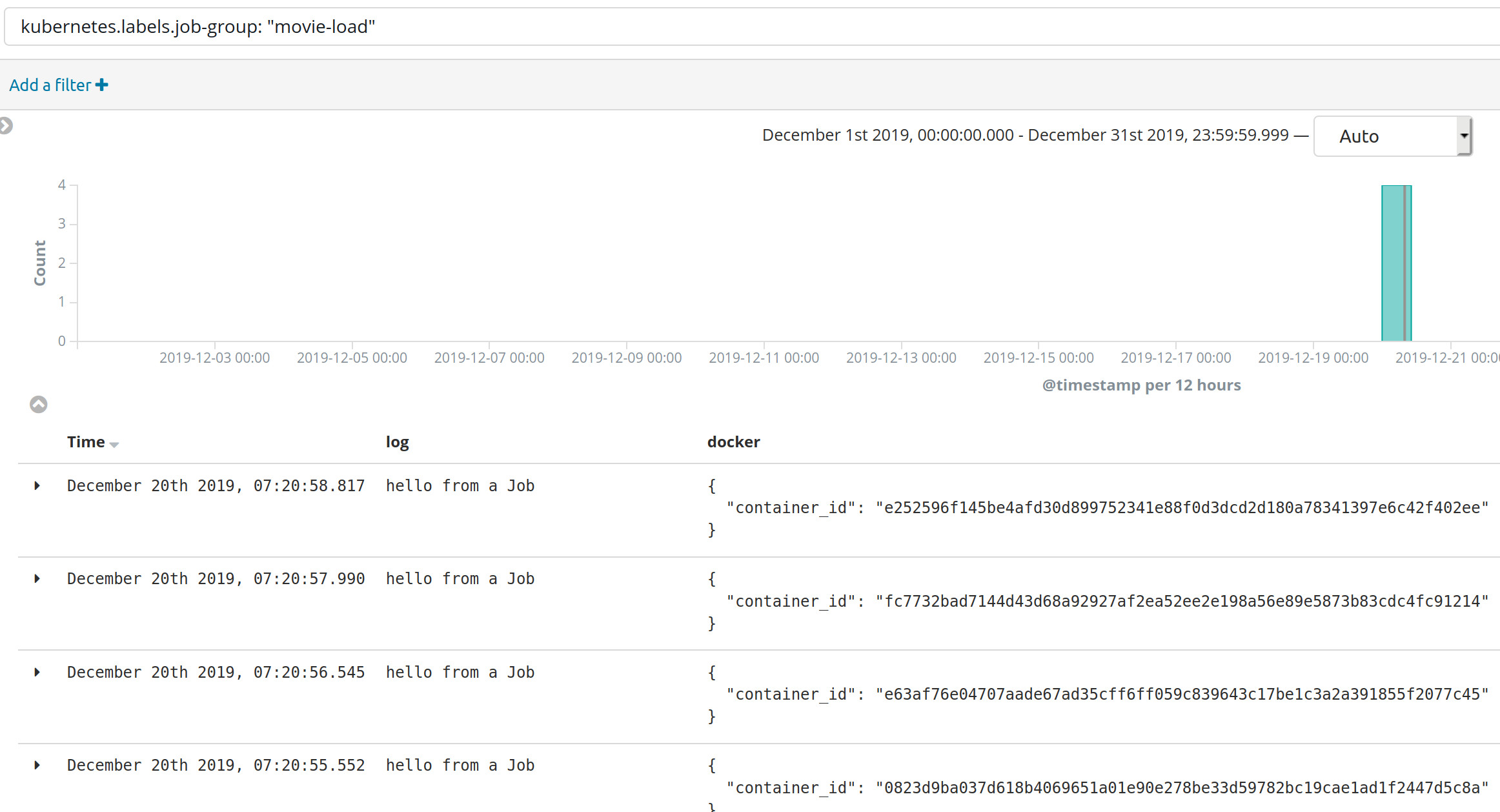

For example we could just filter using the simple expression : kubernetes.labels.job-group: “movie-load”

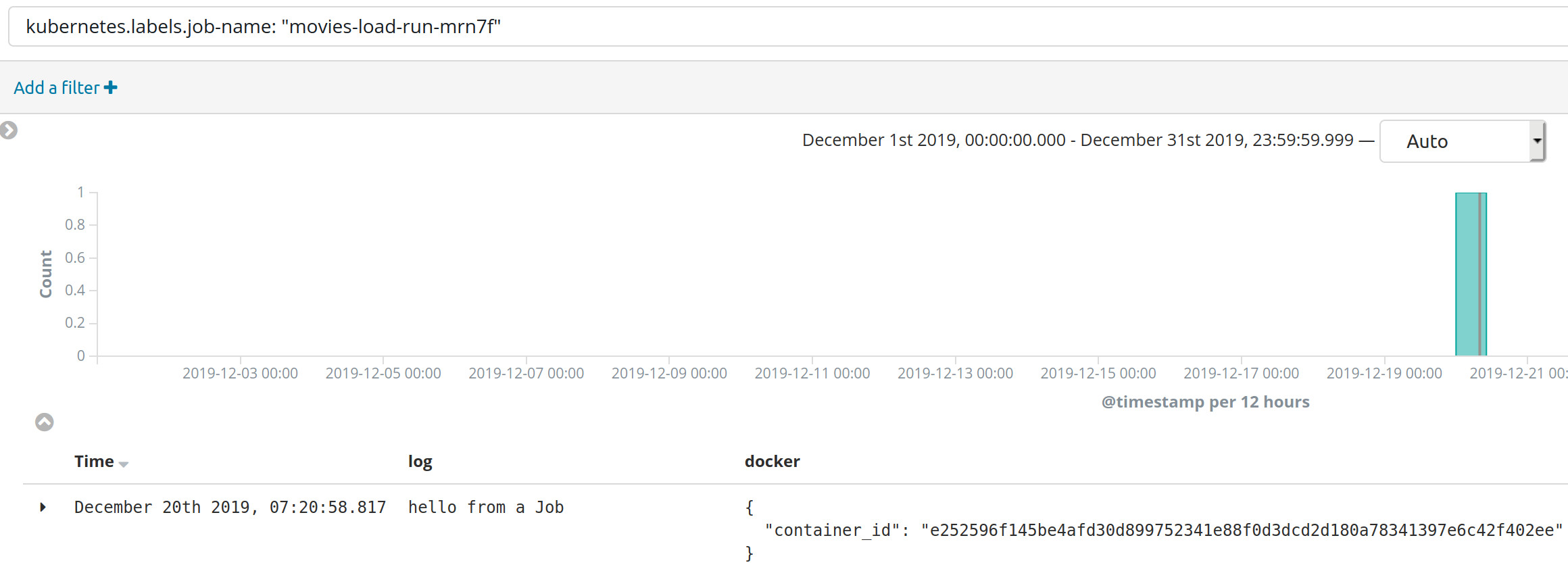

Or we could explore a particular run with : kubernetes.labels.job-name: “movies-load-run-mrn7f”

Creating our own image

For creating our job so far we have use an existing image, however we will need to create our own in order to add the dependencies that we will need, such the PostgreSQL client, but we need a tool for creating images and we are going to use docker.

$ sudo apt-get install \

apt-transport-https \

ca-certificates \

curl \

gnupg-agent \

software-properties-common

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

$ sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu bionic stable"

$ sudo apt-get update

$ sudo apt-get install docker-ce docker-ce-cli containerd.ioNote : there is a bug in linux mint 19.1 that the add-apt-repository does not work, you could add it manually as describe here.

We will need as well to add our user to the docker group so we do not need to sudo :

$ sudo usermod -a -G docker $USERAfter this we should restart our system.

Them we to configure in docker the registry that we have in our MicroK8s cluster modifying the file /etc/docker/daemon.json as superuser :

{

"insecure-registries" : ["localhost:32000"]

}After that we will need to restart the docker engine :

$ sudo systemctl restart dockerNow we will start to create our Dockerfile :

FROM ubuntu:latest

ADD job.sh /usr/src/job.sh

RUN chmod +x /usr/src/job.sh

CMD ["/usr/src/job.sh"]Now we will could build our container with :

$ docker build . -t localhost:32000/movie-load:registryIf we like to test our docker we could do :

$ docker run localhost:32000/movie-load:registry

hello from a shell script in a dockerNow we will push our docker into the registry

$ docker push localhost:32000/movie-loadWe need to modify the database-load.yml for our job to refer to the new image:

apiVersion: batch/v1

kind: Job

metadata:

generateName: movies-load-run- # the name of our job

labels:

job-group: movie-load # logical grouping

spec:

template:

metadata:

labels:

job-group: movie-load # logical grouping

spec:

containers:

- name: database-load-container

image: localhost:32000/movie-load:registry

imagePullPolicy: Always

restartPolicy: NeverNow we could run our job as before :

$ microk8s.kubectl create -f database-load.yml And get our logs as we did previously :

$ microk8s.kubectl logs --timestamps --selector=job-group=movie-load

2019-12-21T10:48:37.625914657Z hello from a shell script in a dockerTo make the things simple lets create an script name build.sh to build and push our docker :

#!/bin/sh -

docker build . -t localhost:32000/movie-load:registry

docker push localhost:32000/movie-loadLets make it executable with :

$ chmod +x build.shNow for build and push our docker we could just do :

$ ./build.shAdding a PostgreSQL Client

Since our job will load data from a database we will add the PostgreSQL client our Dockerfile :

FROM ubuntu:latest

RUN apt-get update

RUN apt-get install postgresql-client -y

ADD job.sh /usr/src/job.sh

RUN chmod +x /usr/src/job.sh

CMD ["/usr/src/job.sh"]And we will change our job to test the client :

#!/bin/sh -

set -o errexit

psql --version

echo "job completed"

return 0For making the thing simpler we will create a new script named run.sh ::

#!/bin/sh -

microk8s.kubectl delete jobs --selector=job-group=movie-load

microk8s.kubectl create -f database-load.ymlLets make it executable as well :

$ chmod +x run.shNow we could easily build and run our changes with :

$ ./build.sh

Sending build context to Docker daemon 7.168kB

Step 1/6 : FROM ubuntu:latest

---> 549b9b86cb8d

Step 2/6 : RUN apt-get update

---> Using cache

---> dcad669c2dc7

Step 3/6 : RUN apt-get install postgresql-client -y

---> Using cache

---> 653870d02d92

Step 4/6 : ADD job.sh /usr/src/job.sh

---> Using cache

---> f5eb8c9877f7

Step 5/6 : RUN chmod +x /usr/src/job.sh

---> Using cache

---> 2f1342e99195

Step 6/6 : CMD ["/usr/src/job.sh"]

---> Using cache

---> 2c93a1f139ef

Successfully built 2c93a1f139ef

Successfully tagged localhost:32000/movie-load:registry

The push refers to repository [localhost:32000/movie-load]

a05f1dc51a49: Layer already exists

0cabfb9c787c: Layer already exists

422cf4890fa0: Layer already exists

918efb8f161b: Layer already exists

27dd43ea46a8: Layer already exists

9f3bfcc4a1a8: Layer already exists

2dc9f76fb25b: Layer already exists

registry: digest: sha256:a017e6185297983a583d9e4caf08524e961e75672e3a98cde959e8b3c675010a size: 1990

$ ./run.sh

job.batch "movies-load-run-llftf" deleted

job.batch/movies-load-run-9qrfd created

$ ./microk8s.kubectl get pods --selector=job-group=movie-load

2019-12-21T11:29:41.615865967Z psql (PostgreSQL) 10.10 (Ubuntu 10.10-0ubuntu0.18.04.1)

2019-12-21T11:29:41.616010932Z job completedConnecting to our database

In order to connect to our database we require credentials so first we will inject them in our job changing our database-load.yml :

apiVersion: batch/v1

kind: Job

metadata:

generateName: movies-load-run- # the name of our job

labels:

job-group: movie-load # logical grouping

spec:

template:

metadata:

labels:

job-group: movie-load # logical grouping

spec:

containers:

- name: database-load-container

image: localhost:32000/movie-load:registry

imagePullPolicy: Always

volumeMounts:

- name: db-credentials

mountPath: "/etc/db-credentials"

readOnly: true

restartPolicy: Never

volumes:

- name: db-credentials

secret:

secretName: moviesdba.movies-db-cluster.credentialsNow we will modify our script job.sh to use it :

#!/bin/sh -

set -o errexit

cd /usr/src

export PGHOST=$MOVIES_DB_CLUSTER_SERVICE_HOST

export PGPORT=$MOVIES_DB_CLUSTER_SERVICE_PORT_POSTGRESQL

export PGDATABASE="movies"

export PGUSER=`cat /etc/db-credentials/username`

export PGPASSWORD=`cat /etc/db-credentials/password`

psql < init.sql

echo "job completed"

return 0This script will run a file init.sql into our database, let’s create it :

SELECT 'hello from a SQL' as message, current_timestamp as date;But we need to add this file into our Dockerfile :

FROM ubuntu:latest

RUN apt-get update

RUN apt-get install postgresql-client -y

ADD job.sh /usr/src/job.sh

RUN chmod +x /usr/src/job.sh

ADD init.sql /usr/src/init.sql

CMD ["/usr/src/job.sh"]Finally we could build and run our job :

$ ./build.sh

$ ./run.sh

$ microk8s.kubectl get pods --selector=job-group=movie-load

2019-12-21T12:16:28.063280527Z message | date

2019-12-21T12:16:28.063298709Z ------------------+-------------------------------

2019-12-21T12:16:28.063301164Z hello from a SQL | 2019-12-21 12:16:28.062985+00

2019-12-21T12:16:28.063302989Z (1 row)

2019-12-21T12:16:28.063304716Z

2019-12-21T12:16:28.063652329Z job completedWith this running we could add to our init.sql the creation of the tables for our database :

DROP TABLE IF EXISTS movies;

CREATE TABLE movies (

id serial primary key,

title text not null,

genres varchar(1024)

);

DROP TABLE IF EXISTS ratings;

CREATE TABLE ratings (

id serial primary key,

user_id int not null,

movie_id int not null,

rating float not null,

created bigint not null

);

vacuum full;We will create some tables from our jobs using this SQL statements, let’s build and run our job :

$ ./build.sh

$ ./run.sh

$ microk8s.kubectl get pods --selector=job-group=movie-load

NOTICE: table "movies" does not exist, skipping

CREATE TABLE

NOTICE: table "ratings" does not exist, skipping

CREATE TABLE

VACUUM

job completeWe got some messages because our tables didn’t exist but if we run our job again :

$ ./run.sh

$ microk8s.kubectl get pods --selector=job-group=movie-load

DROP TABLE

CREATE TABLE

DROP TABLE

CREATE TABLE

VACUUM

job completedDownload a file with movies data

Now that we are able to create our tables we will download movies data from movielens, but first let’s modify our database-load.yml to have a volume to temporary store this data using emptyDir :

apiVersion: batch/v1

kind: Job

metadata:

generateName: movies-load-run- # the name of our job

labels:

job-group: movie-load # logical grouping

spec:

template:

metadata:

labels:

job-group: movie-load # logical grouping

spec:

containers:

- name: database-load-container

image: localhost:32000/movie-load:registry

imagePullPolicy: Always

volumeMounts:

- name: db-credentials

mountPath: "/etc/db-credentials"

readOnly: true

- name: download

mountPath: "/tmp/download"

readOnly: false

restartPolicy: Never

volumes:

- name: db-credentials

secret:

secretName: moviesdba.movies-db-cluster.credentials

- name : download

emptyDir: {}An empty dir is able to store information for our pod at long at is runs, and disappear when our pod/job ends, but will we need more tools in our container so we will first modify our Dockerfile adding wget and unzip:

FROM ubuntu:latest

RUN apt-get update

RUN apt-get install postgresql-client -y

RUN apt-get install wget -y

RUN apt-get install unzip

ADD job.sh /usr/src/job.sh

RUN chmod +x /usr/src/job.sh

ADD init.sql /usr/src/init.sql

CMD ["/usr/src/job.sh"]Now we will modify our job to download our movies file updating our job.sh :

#!/bin/sh -

set -o errexit

echo "downloading file.."

cd /tmp/download

wget -q -c http://files.grouplens.org/datasets/movielens/ml-latest.zip

echo "file downloaded"

echo "unzip file.."

unzip -o ml-latest.zip

echo "file unziped"

echo "running init script.."

export PGHOST=$MOVIES_DB_CLUSTER_SERVICE_HOST

export PGPORT=$MOVIES_DB_CLUSTER_SERVICE_PORT_POSTGRESQL

export PGDATABASE="movies"

export PGUSER=`cat /etc/db-credentials/username`

export PGPASSWORD=`cat /etc/db-credentials/password`

psql < /usr/src/init.sql

echo "init script completed"

echo "job completed"

return 0Now we could run our job but we will follow the log since will take longer :

$ ./build.sh

$ ./run.sh

$ microk8s.kubectl logs --selector=job-group=movie-load --timestamps -f

2019-12-21T17:10:02.282464543Z downloading file..

2019-12-21T17:11:39.597312604Z Archive: ml-latest.zip

2019-12-21T17:11:39.597515143Z creating: ml-latest/

2019-12-21T17:11:39.632152585Z inflating: ml-latest/links.csv

2019-12-21T17:11:39.842122169Z inflating: ml-latest/tags.csv

2019-12-21T17:11:39.842264413Z inflating: ml-latest/genome-tags.csv

2019-12-21T17:11:43.479114191Z inflating: ml-latest/ratings.csv

2019-12-21T17:11:43.479184778Z inflating: ml-latest/README.txt

2019-12-21T17:11:45.081158518Z inflating: ml-latest/genome-scores.csv

2019-12-21T17:11:45.096730053Z inflating: ml-latest/movies.csv

2019-12-21T17:11:45.096873562Z file downloaded

2019-12-21T17:11:45.096880746Z running init script..

2019-12-21T17:11:45.139973302Z DROP TABLE

2019-12-21T17:11:45.156706409Z CREATE TABLE

2019-12-21T17:11:45.164718047Z DROP TABLE

2019-12-21T17:11:45.177663221Z CREATE TABLE

2019-12-21T17:11:46.481417622Z VACUUM

2019-12-21T17:11:46.484035911Z init script completed

2019-12-21T17:11:46.484089699Z job completedUpload csv data into our database

Now that we have the data download we will create an script that insert the csv data into our database, we will name load.sql :

-- movies.csv: movieId,title,genres

\copy movies (id, title, genres) FROM 'ml-latest/movies.csv' WITH (DELIMITER ',', FORMAT CSV, HEADER true, ESCAPE '"', ENCODING 'UTF-8');

-- ratings.csv: userId,movieId,rating,timestamp

\copy ratings (user_id, movie_id, rating, created) FROM 'ml-latest/ratings.csv' WITH (DELIMITER ',', FORMAT CSV, HEADER true, ESCAPE '"', ENCODING 'UTF-8');We need to add this new SQL script into our Dockerfile :

FROM ubuntu:latest

RUN apt-get update

RUN apt-get install postgresql-client -y

RUN apt-get install wget -y

RUN apt-get install unzip

ADD job.sh /usr/src/job.sh

RUN chmod +x /usr/src/job.sh

ADD init.sql /usr/src/init.sql

ADD load.sql /usr/src/load.sql

CMD ["/usr/src/job.sh"]After this we need to modify our job.sh to run our new sql :

#!/bin/sh -

set -o errexit

echo "downloading file.."

cd /tmp/download

wget -q -c http://files.grouplens.org/datasets/movielens/ml-latest.zip

echo "file downloaded"

echo "unzip file.."

unzip -o ml-latest.zip

echo "file unziped"

export PGHOST=$MOVIES_DB_CLUSTER_SERVICE_HOST

export PGPORT=$MOVIES_DB_CLUSTER_SERVICE_PORT_POSTGRESQL

export PGDATABASE="movies"

export PGUSER=`cat /etc/db-credentials/username`

export PGPASSWORD=`cat /etc/db-credentials/password`

echo "running init script.."

psql < /usr/src/init.sql

echo "init script completed"

echo "running load script.."

psql < /usr/src/load.sql

echo "load script completed"

echo "job completed"

return 0Now we could run our job to load our database:

$ ./build.sh

$ ./run.sh

$ microk8s.kubectl logs --selector=job-group=movie-load --timestamps -f

2019-12-21T17:28:33.973743423Z downloading file..

2019-12-21T17:30:12.031149113Z file downloaded

2019-12-21T17:30:12.031203659Z unzip file..

2019-12-21T17:30:12.034132763Z Archive: ml-latest.zip

2019-12-21T17:30:12.03426501Z creating: ml-latest/

2019-12-21T17:30:12.043329834Z inflating: ml-latest/links.csv

2019-12-21T17:30:12.241631499Z inflating: ml-latest/tags.csv

2019-12-21T17:30:12.241778497Z inflating: ml-latest/genome-tags.csv

2019-12-21T17:30:15.845676942Z inflating: ml-latest/ratings.csv

2019-12-21T17:30:15.845749788Z inflating: ml-latest/README.txt

2019-12-21T17:30:17.440092559Z inflating: ml-latest/genome-scores.csv

2019-12-21T17:30:17.455591045Z inflating: ml-latest/movies.csv

2019-12-21T17:30:17.455835049Z file unziped

2019-12-21T17:30:17.456391292Z running init script..

2019-12-21T17:30:17.482454366Z DROP TABLE

2019-12-21T17:30:17.492437203Z CREATE TABLE

2019-12-21T17:30:17.495135958Z DROP TABLE

2019-12-21T17:30:17.502169062Z CREATE TABLE

2019-12-21T17:30:18.880631358Z VACUUM

2019-12-21T17:30:18.883385958Z init script completed

2019-12-21T17:30:18.883437157Z running load script..

2019-12-21T17:30:19.025907717Z COPY 58098

2019-12-21T17:32:29.037196091Z COPY 27753444

2019-12-21T17:32:29.037863671Z load script completed

2019-12-21T17:32:29.037873093Z job completedThis job has load 58k movies and 2.7M ratings, in a 2GB file into our database in about 4 minutes, 2 minutes just to import the data, not bat at all.

Exploring the loaded data

Lest explore the data using PSQL, we will login in our server with our moviesdba user so we need to get it password, we could get it with:

$ microk8s.kubectl get secret moviesdba.movies-db-cluster.credentials -o 'jsonpath={.data.password}' | base64 -d

9oYUFcamSKwjB5Yrg099glLHdqg8C1IkScRfd5TeHTisiuj23FQrx3YEW6fB3ctJWe will forward the postgres port 5432 on our master to our localhost port 6432, this will run until we do ctrl+c :

$ microk8s.kubectl port-forward movies-db-cluster-0 6432:5432

Forwarding from 127.0.0.1:6432 -> 5432

Forwarding from [::1]:6432 -> 5432Finally we can connect to our database with with the provide user and password using psql in another shell :

$ psql -h localhost -p 6432 -U moviesdba movies

Password for user moviesdba:

psql (11.6 (Ubuntu 11.6-1.pgdg18.04+1), server 11.5 (Ubuntu 11.5-1.pgdg18.04+1))

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, bits: 256, compression: off)

Type "help" for help.

movies=# SELECT * FROM movies LIMIT 10;

id | title | genres

----+------------------------------------+---------------------------------------------

1 | Toy Story (1995) | Adventure|Animation|Children|Comedy|Fantasy

2 | Jumanji (1995) | Adventure|Children|Fantasy

3 | Grumpier Old Men (1995) | Comedy|Romance

4 | Waiting to Exhale (1995) | Comedy|Drama|Romance

5 | Father of the Bride Part II (1995) | Comedy

6 | Heat (1995) | Action|Crime|Thriller

7 | Sabrina (1995) | Comedy|Romance

8 | Tom and Huck (1995) | Adventure|Children

9 | Sudden Death (1995) | Action

10 | GoldenEye (1995) | Action|Adventure|Thriller

(10 rows)

movies=# SELECT * FROM ratings LIMIT 10;

id | user_id | movie_id | rating | created

----+---------+----------+--------+------------

1 | 1 | 307 | 3.5 | 1256677221

2 | 1 | 481 | 3.5 | 1256677456

3 | 1 | 1091 | 1.5 | 1256677471

4 | 1 | 1257 | 4.5 | 1256677460

5 | 1 | 1449 | 4.5 | 1256677264

6 | 1 | 1590 | 2.5 | 1256677236

7 | 1 | 1591 | 1.5 | 1256677475

8 | 1 | 2134 | 4.5 | 1256677464

9 | 1 | 2478 | 4 | 1256677239

10 | 1 | 2840 | 3 | 1256677500

(10 rows)

movies=# \qConclusions

We this we have complete this article, we have learn tons of new concepts, tools and information to create our own jobs that could run in our cluster and now we have a database populated with data that we will be used in further examples.

If you like to download the scripts for this example you could grab it from this github repository.

Note : 22 Dec 2019 : I’ve update the SQL scripts to add some foreign keys and indexes to speed up queries on the database, they are updated in the repository

Resources

- https://grouplens.org/datasets/movielens/

- F. Maxwell Harper and Joseph A. Konstan. 2015. The MovieLens Datasets: History and Context. ACM Transactions on Interactive Intelligent Systems (TiiS) 5, 4: 19:1–19:19.

- https://github.com/bozaro/tech-db-lectures/tree/master/scripts/movielens

- https://kubernetes.io/docs/concepts/workloads/controllers/jobs-run-to-completion/

- https://github.com/containers/buildah/blob/master/install.md

- https://docs.docker.com/install/linux/docker-ce/ubuntu/

- https://forums.linuxmint.com/viewtopic.php?t=300469

- https://microk8s.io/docs/registry-built-in

- https://kubernetes.io/docs/concepts/configuration/secret/

- https://kubernetes.io/docs/concepts/storage/volumes/